Mikayla Peak

BLOG 05

Friday 30th August

Context

In week five, I focused on creating a first real iteration of the toolkit. Building on the brainstorming and drafting process, I aimed to create a more comprehensive version that I can continue refining. Keeping the end-users in mind, I wanted to ensure that the toolkit would be functional, accessible, and aligned with the DEI principles I’ve been focusing on.

Action

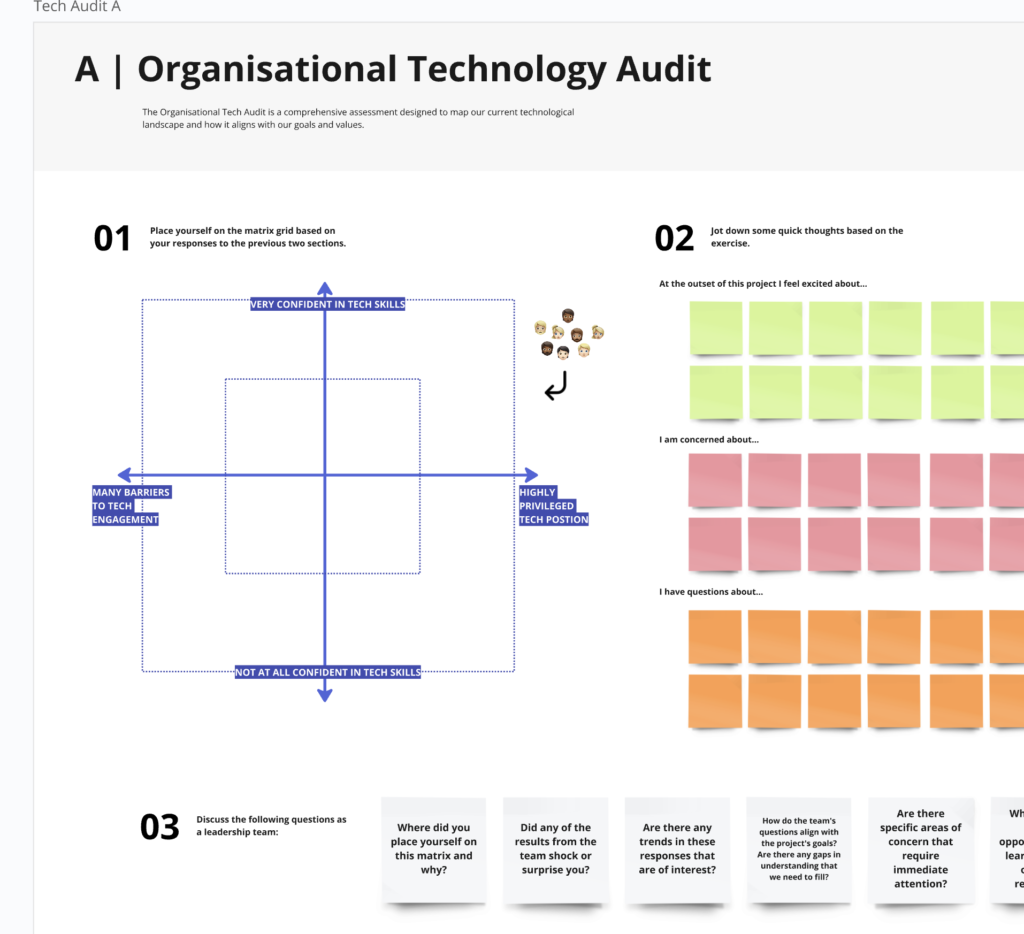

Using the draft from previous weeks, I developed the first iteration of the toolkit. During this phase, I paid close attention to the needs of my users, which helped shape many of the features and resources in the toolkit. These include components such as a DEI self-assessment, technology audit templates, and an implementation plan. To ensure the toolkit’s practicality, I structured it to include checkpoints for both individual reflections and group brainstorming sessions. These checkpoints are designed to help teams regularly assess progress and alignment with DEI goals.

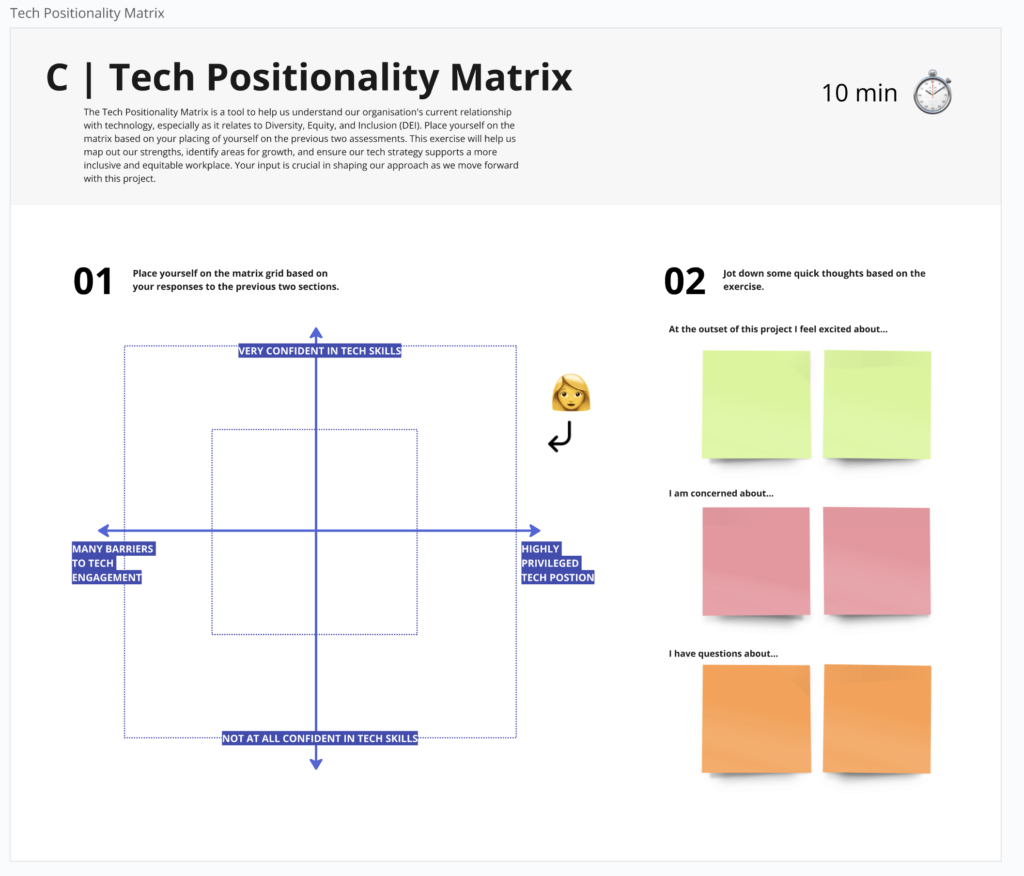

One of the key features I incorporated is a Tech Positionality Matrix. This matrix will allow organisations to visually map out where they stand in terms of DEI technology integration, helping them track tangible progress over time. This will provide a clear picture of where they need to focus their efforts, making the toolkit not just a theoretical resource but one that actively guides organisations to measurable outcomes. Alongside the matrix, I started thinking about tangible metrics that organisations could use to track their progress, and about prompts to help them set KPIs in this area.

Result

The result is a version that I’m quite satisfied with for a first iteration. It has a more concrete structure than the draft version, though I acknowledge that it differs from the draft in several key ways. Some ideas evolved naturally during the process, and I learnt to stay flexible in the design while keeping the users at the centre of my decision-making process. The toolkit now provides a structured yet adaptable framework, with tangible metrics for organisations to track.

However, as I developed the toolkit further, I had to be conscious of finding the right balance between depth and simplicity. One challenge I encountered was ensuring that the toolkit was detailed enough to be useful and provide value, but not so complex that it became overwhelming or too time-consuming for organisations to implement. This is especially relevant for smaller organisations that may not have the same resources as larger ones. I’m learning that striking this balance is key to ensuring the toolkit is practical without overburdening its users. As a result, I focused on keeping the steps clear and concise, while still providing enough depth for organisations to feel equipped to drive meaningful change.

Learnings

One of the major takeaways from this week’s work is realising how dynamic this toolkit will be as it continues to evolve. As I worked on it, I noticed how ideas that seemed fixed during the brainstorming phase adapted as they were put into practice. This iterative process is vital to ensuring that the toolkit remains user-centred and practical. Additionally, I recognised the importance of staying focused on the needs of my users.

Another key learning was understanding how different teams might interpret the toolkit. For example, a larger organisation may approach the DEI assessments and tech audits with a more formal, structured process, while smaller teams might take a more flexible approach. As a result, I’ve started thinking about how to adapt the toolkit to accommodate different organisational sizes and cultures. This realisation has prompted me to consider creating multiple versions of the toolkit, each tailored to a specific type of organisation, so that it can scale while still remaining customisable.